The uncomfortable truth is this: parts of this article were assembled by artificial intelligence. Before you dismiss it outright, consider the process. I spoke my thoughts aloud, essentially dictating this piece, and an AI transcribed the raw output and structured it into the article you are now reading. The AI provided the form; I provided the intent.

This admission is likely to provoke strong reactions, particularly within journalism. Currently, using AI is widely regarded as a breach of journalistic integrity — a shortcut undermining the core human labor of reporting and writing. While this sentiment isn’t entirely unfounded, the reality is that AI tools are rapidly becoming an unavoidable part of the professional landscape.

The Inevitable Shift

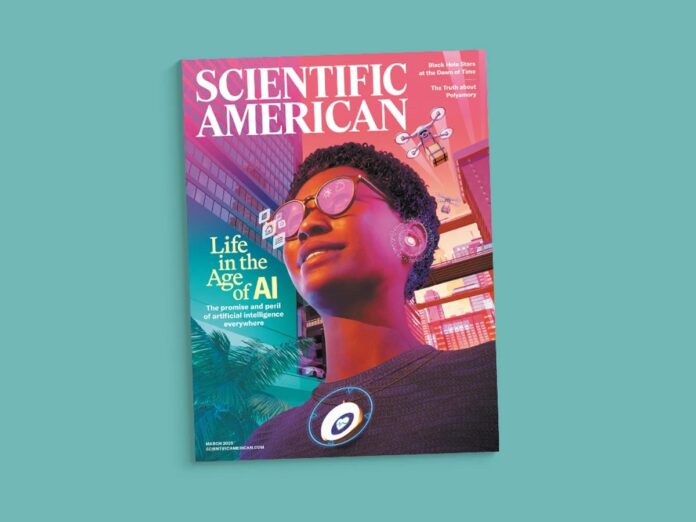

We already live in an AI-saturated world. These tools aren’t vanishing; they are evolving at a remarkable pace, and the generative AI market has exploded since 2023. A 2025 study by Wiley found that AI adoption among researchers jumped from 57% in 2024 to 84% just one year later, and more than 60% of these researchers are using AI for research and even publication tasks. This isn’t a distant threat; it’s the present.

There is an irony here: we have faced similar anxieties before. The initial reaction to the World Wide Web was distrust. Critics argued that real research required time in physical libraries and dismissed web searches as lazy shortcuts that yielded unreliable results. Today, that argument feels absurd. We adapted, set boundaries, and learned to distinguish legitimate research from mere convenience.

Collaboration, Not Replacement

The current debate over AI authorship is revealing: if I use Microsoft Word to correct a typo, no one questions my ownership of the text. If an AI reorders my dictated sentences for clarity, where is the line? The distinction is becoming blurred.

In fact, the AI that assisted with this article suggested including a statistic about AI’s own growth in the field, a meta-moment that illustrates the emerging collaborative loop. I approved the suggestion, and that data point now appears in the text. The tool proposes an improvement; the human editor decides whether to accept it.

The question isn’t whether AI will influence journalism, but how. The real issue is not cheating but transparency, control, and clear ethical guidelines. We must embrace these tools strategically so that we shape how they are used, rather than let fear push their use into secrecy.

The line between human and machine authorship is already fading. The critical task ahead is defining acceptable use and ensuring responsible integration.

Ultimately, the medium has changed, but the core message remains mine. The question of who truly “wrote” this piece is less important than acknowledging the evolving reality of how content is created.